June 18, 2023


3 LLM Research Papers That Every Data Scientist Must Read

LLMs (Large Language Models) are all the rage right now, thanks to ChatGPT. In this post, we draw your attention to three foundational papers published between 2017 and 2023 that we think every Data Scientist must read.

Since its release in November 2022, ChatGPT has taken the world by storm. Because this space changes so quickly, it can be hard to keep up with the pace of development, especially for those who are new to the field or have not been following its progress.

While many important contributions have made ChatGPT a reality, three papers published in the last six years have had an outsized impact in making LLMs exciting for the world.

So what can you expect to learn from reading these papers? We thought it would be interesting to give a high-level overview of each paper with the help of a word cloud.

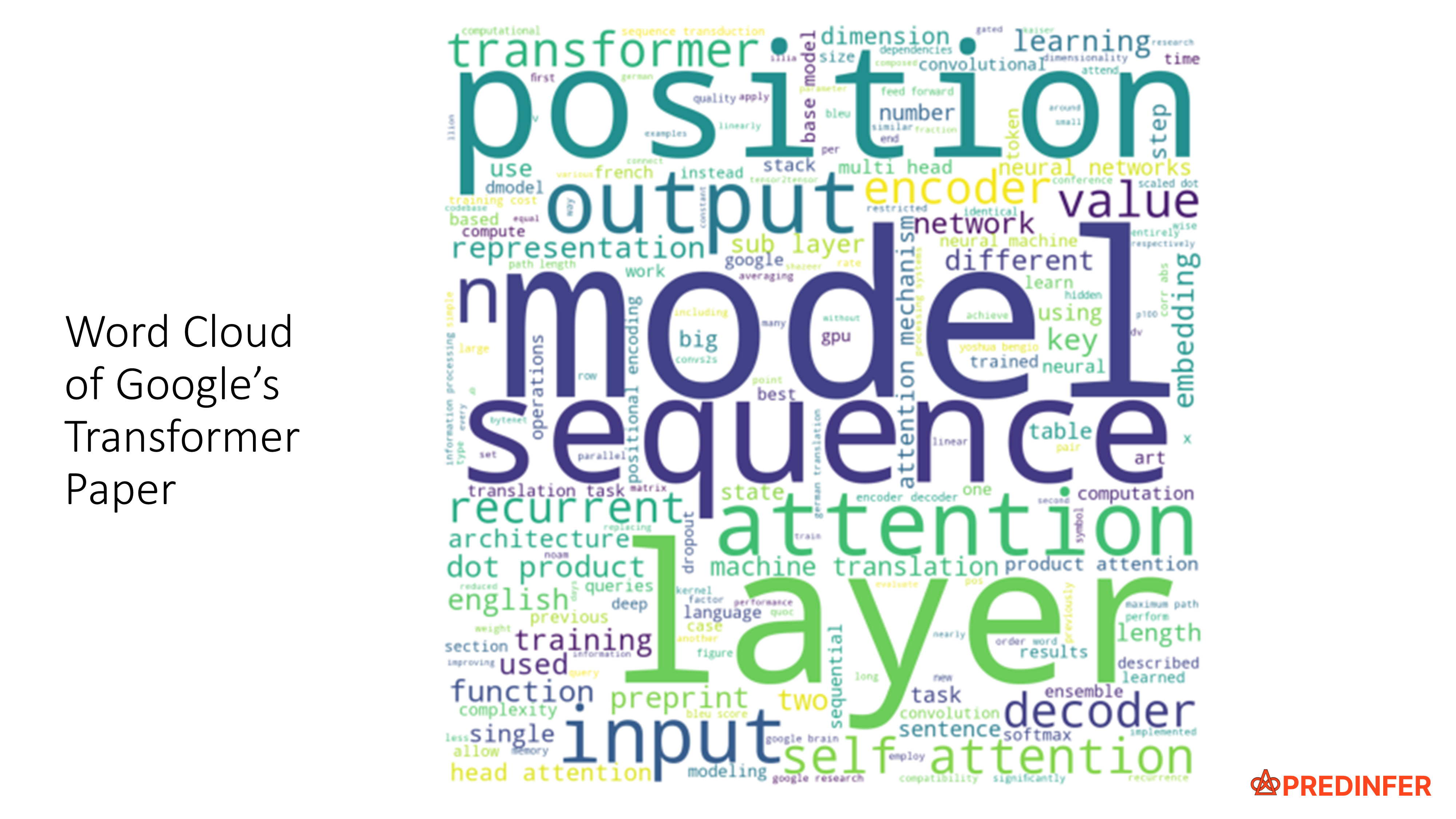

1. 2017: Google publishes the "Transformer" paper

The "Transformer" paper, officially published as "Attention Is All You Need", made the following important contributions:

- Before the transformer paper, RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks) were the state of the art. Their primary drawbacks were that they struggled to retain information across long input sequences and that they could not be parallelized, because they process a sequence one token at a time in a fixed order. Transformers addressed both of these drawbacks.

- Transformers are built entirely on the concept of "attention", i.e. the ability of a model to focus on the most relevant parts of an input. Attention had been used alongside RNNs before, but the transformer dispenses with recurrence altogether. Because it does not have to process tokens one at a time in a fixed order, it is parallelizable and hence efficient to train.

- Transformers use an "encoder" and "decoder" architecture that relies on positional encodings and multi-head attention.

- The "encoder" builds a contextual representation of the input sequence, and the "decoder" uses that representation to generate the output sequence (for example, a translation) one token at a time.
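To make attention and positional encodings a little more concrete, here is a minimal, dependency-free Python sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, and the sinusoidal positional encodings described in "Attention Is All You Need". It is an illustration under simplifying assumptions, not the paper's implementation: there are no learned projection matrices, no batching, and only a single head (multi-head attention runs several such attention functions in parallel on learned projections and concatenates the results). The function names are our own.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with Q, K, V given as lists of row vectors."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        # Score this query against every key; each query row is computed
        # independently of the others, so all rows could run in parallel.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return outputs

def sinusoidal_positional_encoding(seq_len, d_model):
    """PE(pos, 2i) = sin(pos / 10000^(2i/d_model)); PE(pos, 2i+1) = cos(same angle)."""
    pe = []
    for pos in range(seq_len):
        row = []
        for j in range(d_model):
            angle = pos / (10000 ** ((j - j % 2) / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

# Toy self-attention over 3 token vectors of size 4, with positional encodings
# added; Q, K and V are the same matrix here because projections are omitted.
x = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
pe = sinusoidal_positional_encoding(3, 4)
x = [[a + b for a, b in zip(row, p)] for row, p in zip(x, pe)]
out = scaled_dot_product_attention(x, x, x)
print(len(out), len(out[0]))  # 3 4
```

The positional encodings are needed because attention on its own has no notion of word order; adding a position-dependent vector to each token lets the model tell "dog bites man" from "man bites dog".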

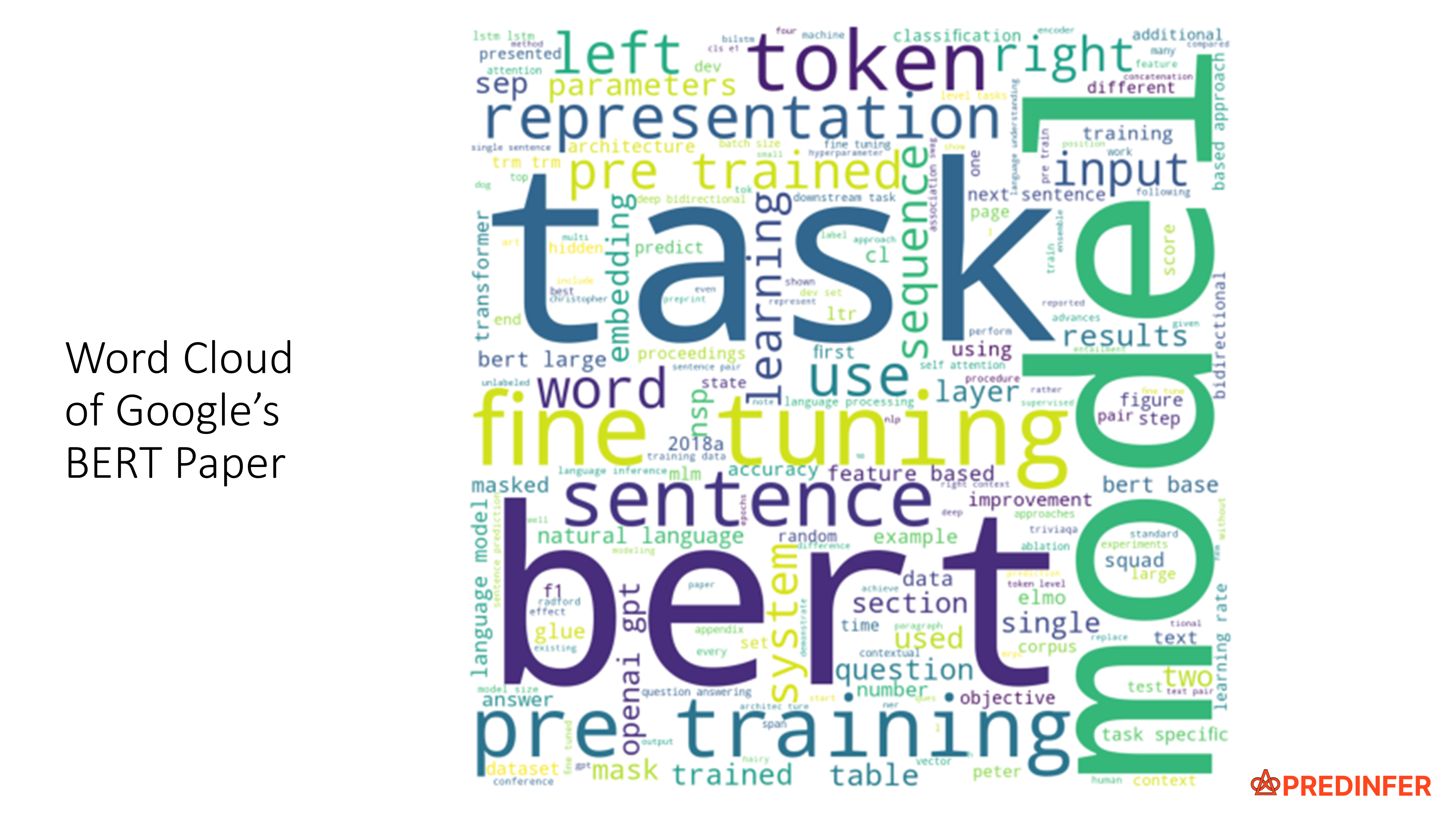

2. 2019: Google publishes the "BERT" paper

The "BERT" paper, officially published as "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding", made the following important contributions:

- Following up on the success of the transformer paper, Google next released the "BERT" model, which is based on the transformer architecture. The key difference from the original transformer is that a BERT model only uses "encoders" and does not use any "decoders".

- BERT models have proven to be very effective at several NLP tasks, such as:

- Sentiment Analysis: E.g. determine if a movie review is positive or negative.

- Question Answering: E.g. extract the answer to a question from a given passage of text.

- Text Prediction: E.g. predict a missing word in a sentence from the words on both sides of it.

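- Text Generation: E.g. generating text about a given topic, although BERT's lack of a "decoder" makes it far less suited to free-form generation (such as ChatGPT writing an article from a simple input query) than decoder-based models.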

- Summarization: E.g. Summarize legal contracts, PDF documents, blog posts etc.

- Polysemy Resolution: E.g. differentiate words that have multiple meanings (like ‘bank’) based on the surrounding text.

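- Prior to BERT, each of these tasks typically required its own task-specific model architecture. With BERT, a single pre-trained model can be fine-tuned for each task by adding just one additional output layer.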
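The "pre-training" in BERT's title refers to a "masked language model" objective: some input tokens are hidden and the model must predict them from the context on both sides. The Python sketch below shows only that masking step, following the recipe described in the BERT paper: about 15% of positions become prediction targets, and of those, 80% are replaced with [MASK], 10% with a random token and 10% are left unchanged. Simplifying assumptions: whitespace-split words stand in for BERT's WordPiece tokens, each position is selected independently with probability 0.15 rather than by sampling an exact count, and `mask_tokens` is a hypothetical helper name.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """BERT-style masking sketch (hypothetical helper).

    Returns (inputs, labels): labels[i] holds the original token at positions the
    model must predict, and None everywhere else.
    """
    rng = rng or random.Random()
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:  # select this position as a prediction target
            labels[i] = tok
            r = rng.random()
            if r < 0.8:
                inputs[i] = MASK  # 80%: replace with the [MASK] token
            elif r < 0.9:
                inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
            # remaining 10%: keep the original token unchanged
    return inputs, labels

tokens = "i deposited cash at the bank".split()
inputs, labels = mask_tokens(tokens, vocab=tokens, rng=random.Random(0))
print(inputs)
print(labels)
```

Because the model sees words on both sides of each masked position, it learns "bidirectional" context, which is what lets it resolve, for example, whether "bank" means a riverbank or a financial institution.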

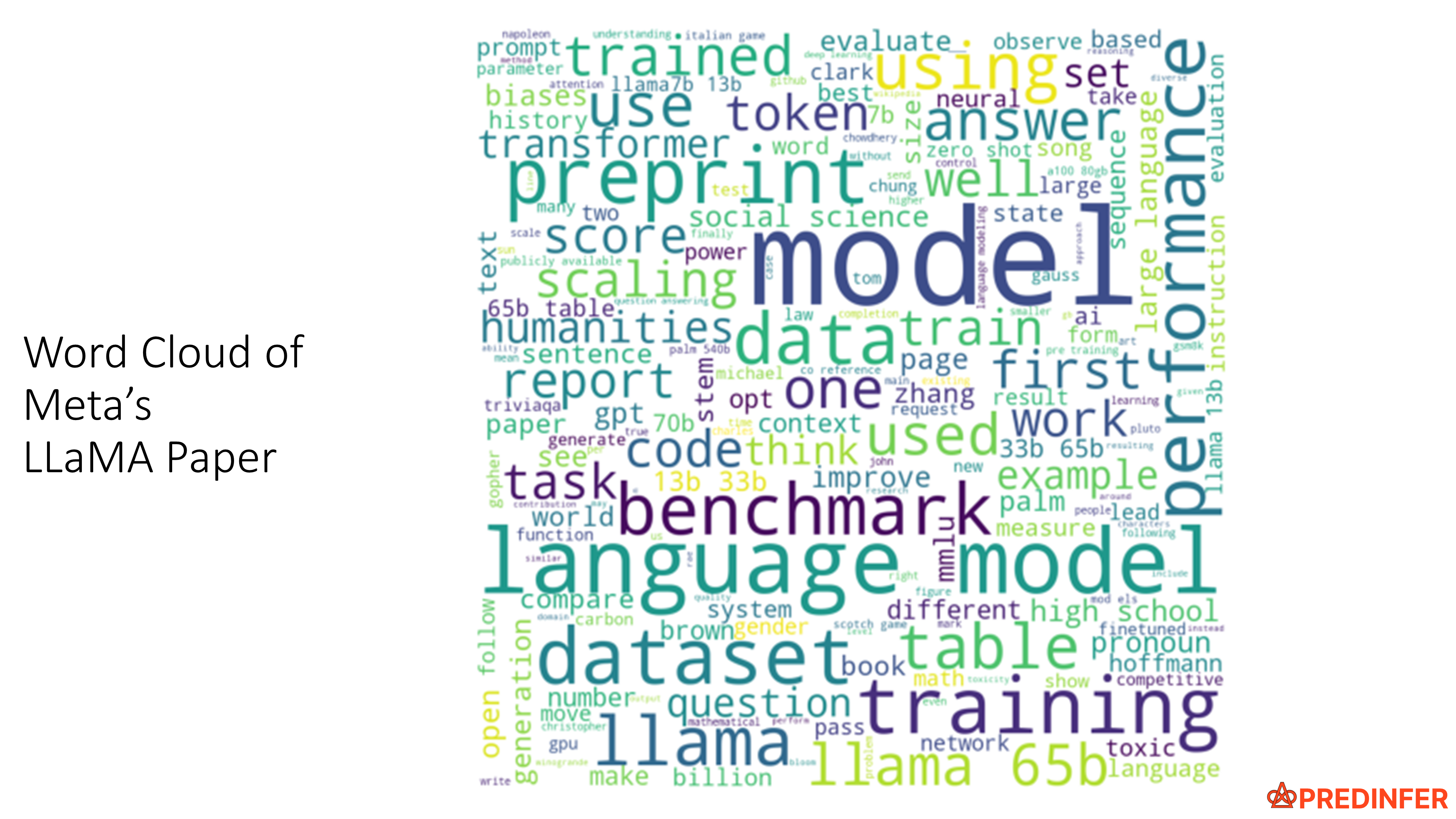

3. 2023: Meta publishes the "LLaMA" paper

The "LLaMA" paper, officially published as "LLaMA: Open and Efficient Foundation Language Models", made the following important contributions:

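- Released a family of foundation models ranging from 7 to 65 billion parameters, trained on trillions of tokens using only publicly available datasets. The paper reports that LLaMA-13B outperforms GPT-3 (175B) on most benchmarks and that LLaMA-65B is competitive with Chinchilla-70B and PaLM-540B. The models were released to the research community, in stark contrast to OpenAI's models behind ChatGPT, which are closed-source.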

- The LLaMA paper helped advance open research into LLMs and led to the release of several open-source, assistant-style large language models such as GPT4All.

- The LLaMA model is based on the transformer architecture. However, unlike BERT, LLaMA only uses "decoders" and does not use any "encoders". This decoder-only design is also what the GPT models behind ChatGPT use.
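The practical difference between an encoder-only model like BERT and a decoder-only model like LLaMA is which positions may attend to which. A decoder applies a causal mask, so each token only sees the tokens before it, and it generates text autoregressively, one token at a time. The Python sketch below illustrates both ideas under loud assumptions: `toy_model` is a hypothetical bigram lookup table standing in for a real decoder (which would condition on the entire prefix, not just the last token), and decoding is greedy, whereas chat models usually sample.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j.

    A decoder only looks backwards (j <= i); an encoder such as BERT's would
    allow every pair of positions.
    """
    return [[j <= i for j in range(n)] for i in range(n)]

def generate(next_token_scores, prompt, max_new_tokens, eos=None):
    """Greedy autoregressive decoding: repeatedly append the highest-scoring token.

    next_token_scores(tokens) -> dict mapping candidate token -> score
    (a hypothetical interface standing in for the model).
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        nxt = max(scores, key=scores.get)
        tokens.append(nxt)
        if nxt == eos:
            break
    return tokens

# Hypothetical toy "model": scores depend only on the last token.
BIGRAMS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.9, "ran": 0.1},
    "sat": {"<eos>": 1.0},
}

def toy_model(tokens):
    return BIGRAMS.get(tokens[-1], {"<eos>": 1.0})

for row in causal_mask(3):
    print(["x" if allowed else "." for allowed in row])
print(generate(toy_model, ["the"], max_new_tokens=5, eos="<eos>"))
# ['the', 'cat', 'sat', '<eos>']
```

Each generated token is appended to the input before the next step, which is why decoder-only models suit open-ended text generation.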

One interesting characteristic of the models built on these ideas, such as BERT and LLaMA, is that they are considered "foundation" or "general purpose" models, because they are pre-trained on large amounts of unlabeled text. Many interesting applications come from fine-tuning these models for specific tasks using a machine learning technique known as "transfer learning". See the wildly popular Hugging Face community for many examples of such fine-tuned models.
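Transfer learning comes in several forms. The simplest is to freeze the pre-trained model, use its outputs as features, and train only a small task-specific "head" on labeled data; full fine-tuning instead updates all of the model's weights. The Python sketch below shows that frozen-features variant for sentiment analysis. It is purely illustrative: `pretrained_features` is a hypothetical stand-in that counts a few hand-picked words, where a real setup would use, for example, a BERT encoder's output vector, and the head is a plain logistic regression trained with stochastic gradient descent.

```python
import math

def pretrained_features(text):
    """Hypothetical stand-in for a frozen pre-trained model: text -> fixed vector."""
    words = text.lower().split()
    pos = sum(w in {"great", "good", "loved"} for w in words)
    neg = sum(w in {"bad", "boring", "hated"} for w in words)
    return [float(pos), float(neg)]

def train_head(texts, labels, epochs=200, lr=0.5):
    """Train only a logistic-regression head; the feature extractor is never updated."""
    feats = [pretrained_features(t) for t in texts]  # computed once: the body is frozen
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            logit = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-logit))
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(w, b, text):
    x = pretrained_features(text)
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

texts = ["loved it great acting", "boring and bad", "good film", "hated it"]
labels = [1, 0, 1, 0]
w, b = train_head(texts, labels)
print(predict(w, b, "a great movie"))  # 1
```

Freezing the pre-trained part means only a handful of parameters are learned from a small labeled dataset, which is the main appeal of transfer learning: the general language knowledge comes from pre-training, and the task-specific part is cheap to train.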