September 24, 2023


The Problem Of Data Cascades In Data Science

A cascade is a form of "reinforcing feedback loop" that amplifies changes or trends in only one direction, and this unidirectional nature makes it a compounding force. A "Data Cascade" is essentially a series of compounding events, triggered by poor data quality, that lead to undesirable downstream outcomes.

In this post, we will look at the key results of a paper by a group of Google researchers titled "Everyone wants to do the model work, not the data work: Data Cascades in High-Stakes AI" (Sambasivan et al., CHI 2021).

So why is this a big deal, and why should you pay attention? Let's look at some examples of real-world data cascades, quoted directly from the paper:

"Eye disease detection models, trained on noise-free training data for high model performance, failed to predict the disease in production upon small specks of dust on images."

"As an example, poor data practices reduced accuracy in IBM’s cancer treatment AI and led to Google Flu Trends missing the flu peak by 140%."

"As an example of a cascade, for P3 and P4 (road safety, India), even the slightest movement of a camera due to environmental conditions resulted in failures in detecting traffic violations: '10 different sources may have undergone changes. Cameras might move from the weather. AI models can fail completely.'"

"As an example of a cascade, P18 (wildlife conservation, India) described how after deploying their model for making predictions for potential poaching locations, patrollers contested the predicted locations as being incorrect. Upon further collaboration with the patrollers, P18 and team learned that most of the poaching attacks were not included in the data. As the patrollers were already resource-constrained, the mispredictions of the model ran the risk of leading to overpatrolling in specific areas, leading to poaching in other places."

"In some cases, data collection was expensive and could only be done once (e.g., underwater exploration, road safety survey, farmer survey) and yet, application-domain experts could not always be involved. Conventional AI practices like overt reliance on technical expertise and unsubstantiated assumptions of data reliability appeared to set these cascades off."

"Often they forgot to reset their setting on the GPS app and instead of recording every 5 minutes, it was recording the data every 1 hour. Then it is useless, and it messes up my whole ML algorithm."

"As an example of a data cascade, P8 (robotics, US), described how a lack of metadata and collaborators changing schema without understanding context led to a loss of four months of precious medical robotics data collection. As high-stakes data tended to be niche and specific, with varying underlying standards and conventions in data collection, even minute changes rendered datasets unusable."

As we can see from the real-world case studies above, some root causes of data cascades include:

- Human errors

- Randomness and unpredictability of nature

- Poor data practices
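Human errors such as the GPS misconfiguration quoted above (a logger silently recording every hour instead of every 5 minutes) often go unnoticed until a model misbehaves, yet a simple automated check can surface them at ingestion time. The following is a minimal Python sketch, not something taken from the paper: `check_sampling_interval`, the 5-minute expected interval and the 20% tolerance are illustrative assumptions.

```python
from datetime import datetime, timedelta
from statistics import median


def check_sampling_interval(timestamps, expected, tolerance=0.2):
    """Hypothetical check: is the typical gap between samples close to `expected`?

    Returns (ok, observed) where `observed` is the median gap as a timedelta.
    `tolerance` is the allowed relative deviation (0.2 means +/-20% of `expected`).
    """
    ts = sorted(timestamps)
    if len(ts) < 2:
        raise ValueError("need at least two timestamps to estimate an interval")
    # Gaps between consecutive samples, in seconds.
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(ts, ts[1:])]
    # The median is robust to a few dropped or duplicated samples.
    observed = median(gaps)
    expected_s = expected.total_seconds()
    ok = abs(observed - expected_s) <= tolerance * expected_s
    return ok, timedelta(seconds=observed)


# Usage: a correctly configured logger vs. one left on a 1-hour setting.
start = datetime(2023, 1, 1, 8, 0)
good_log = [start + timedelta(minutes=5 * i) for i in range(12)]
bad_log = [start + timedelta(hours=i) for i in range(12)]

for name, log in [("good", good_log), ("bad", bad_log)]:
    ok, observed = check_sampling_interval(log, expected=timedelta(minutes=5))
    print(name, "ok" if ok else "FLAGGED", "median gap:", observed)
```

Using the median gap rather than the mean keeps a single missing or duplicated reading from triggering a false alarm, while a wholesale change of recording frequency, as in the GPS example, still stands out clearly.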

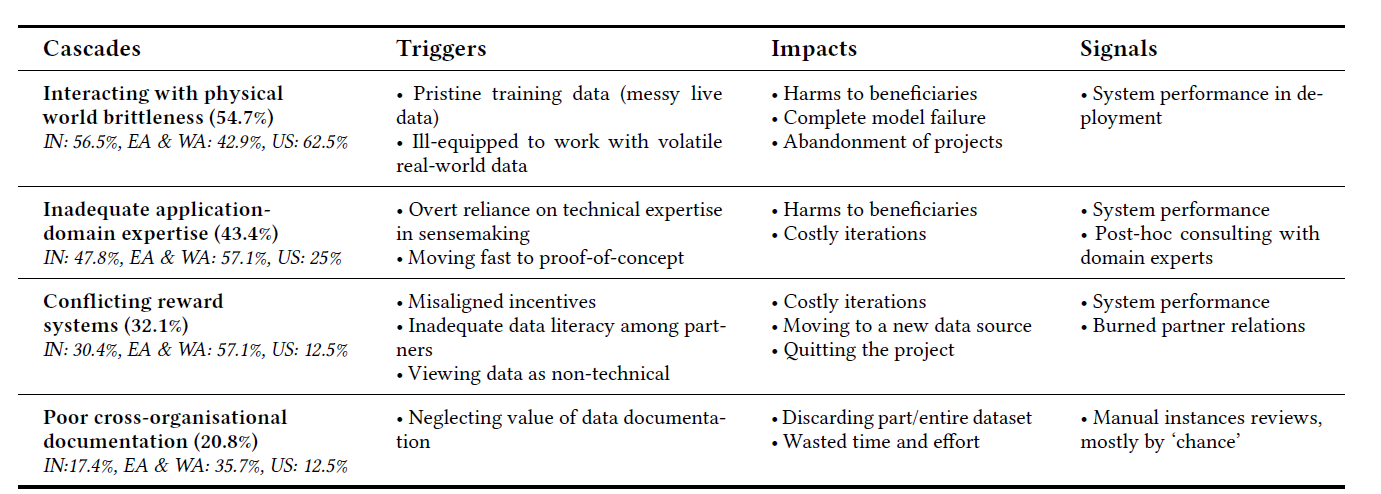

Data cascades often come associated with high costs & high impact. To have a better understanding of cause and effect, let's take a look at the following table from the paper which summarizes the key findings of the research.

In summary, the paper makes the following key suggestions:

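- Watch out for the four types of data cascades summarized in the table above (interacting with physical-world brittleness, inadequate application-domain expertise, conflicting reward systems, and poor cross-organizational documentation), along with their triggers, impacts and signals.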

- Data is often treated as the most under-valued and de-glamorized aspect of AI; do not fall into that trap.

- Make "Data Excellence" a first-class citizen of Data Science & AI.

- Be prepared to work with real-world datasets that are often ‘dirty’ and come with a variety of data quality problems.

- Remember the GIGO (Garbage In, Garbage Out) principle: the quality of the output is determined by the quality of the input.

- Pay attention to metadata (e.g., origin, time, collection process, weather), which provides important context about the data.

- There are many opportunities to achieve "Data Excellence" through "Human-In-The-Loop (HITL)" and "Human-Computer Interaction (HCI)" approaches.

- And finally, use tools where possible to detect and correct data issues. Examples of such tools include data linters and testing/validation systems.
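To make the last suggestion concrete, here is a tiny "data linter" in plain Python. It is a hypothetical illustration, not a tool described in the paper: `lint_batch`, `EXPECTED_SCHEMA` and `REQUIRED_METADATA` are assumed names, and the columns and metadata fields are made up. It checks that each batch carries basic metadata and that every record matches an expected schema, which is the kind of check that would flag a silently renamed column like the one in the robotics example.

```python
# Hypothetical expected schema for a batch of records: column name -> Python type.
EXPECTED_SCHEMA = {"device_id": str, "timestamp": str, "latitude": float, "longitude": float}
# Hypothetical required metadata fields, loosely based on the examples above
# (origin, time, collection process).
REQUIRED_METADATA = ("origin", "collected_at", "collection_process")


def lint_batch(records, metadata, schema=EXPECTED_SCHEMA, required_metadata=REQUIRED_METADATA):
    """Return a list of human-readable issues; an empty list means the batch passed."""
    issues = []
    # 1. Context: every batch must carry non-empty values for its required metadata.
    for field in required_metadata:
        if not metadata.get(field):
            issues.append(f"missing metadata: {field}")
    # 2. Schema: each record must have exactly the expected columns, with the expected types.
    for i, row in enumerate(records):
        missing = schema.keys() - row.keys()
        extra = row.keys() - schema.keys()
        if missing:
            issues.append(f"row {i}: missing columns {sorted(missing)}")
        if extra:
            issues.append(f"row {i}: unexpected columns {sorted(extra)}")
        for col, expected_type in schema.items():
            if col in row and not isinstance(row[col], expected_type):
                issues.append(
                    f"row {i}: {col} should be {expected_type.__name__}, "
                    f"got {type(row[col]).__name__}"
                )
    return issues


# Usage: the second record has a renamed column, and one metadata field is missing.
batch = [
    {"device_id": "gps-01", "timestamp": "2023-01-01T08:00", "latitude": 12.97, "longitude": 77.59},
    {"device_id": "gps-01", "ts": "2023-01-01T08:05", "latitude": 12.97, "longitude": 77.59},
]
meta = {"origin": "field survey", "collected_at": "2023-01-01"}

issues = lint_batch(batch, meta)
for issue in issues:
    print(issue)
```

Returning every issue instead of stopping at the first one makes it easier to see the full extent of a problem in a single run. The type check is deliberately strict (an integer latitude would be flagged); dedicated data validation libraries offer richer, configurable versions of these checks.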