April 22, 2023

Parquet For Data Science

A Parquet file uses less storage space, is faster to process and is highly optimized for Data Science workflows. Should Parquet be your file format of choice?

This post aims to answer some common questions related to the open-source Parquet file format such as:

- What are Parquet files?

- What are the benefits of using the Parquet file format?

- How does the Parquet file format compare with other popular formats such as CSV and JSON?

- Should you use Parquet files for Data Science?

What are Parquet files?

The Parquet file format offers very efficient compression & encoding of columnar data. As a result, Parquet files take up less storage space and are faster to work with. See below for benchmark results comparing Parquet with CSV & JSON.

Parquet is a binary file format. This means you cannot visually inspect the file in a text editor such as Notepad++.

Parquet originated in the Hadoop ecosystem and uses the record shredding and assembly algorithm described in the Dremel paper. It supports complex nested data structures and offers fast read speeds, which makes Parquet purpose-built for Big Data and scalable Data Processing.

What are the benefits of using the Parquet file format?

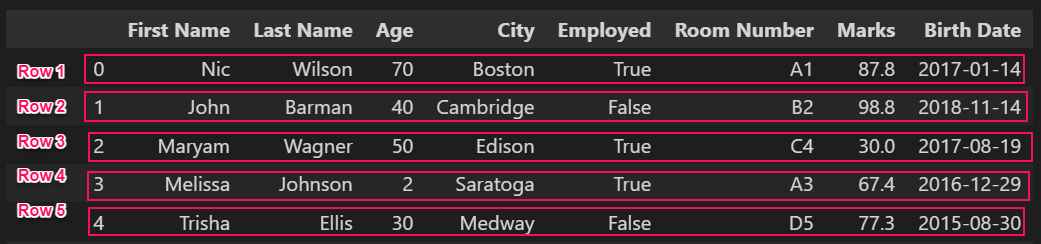

CSV files store data row-wise. For example:

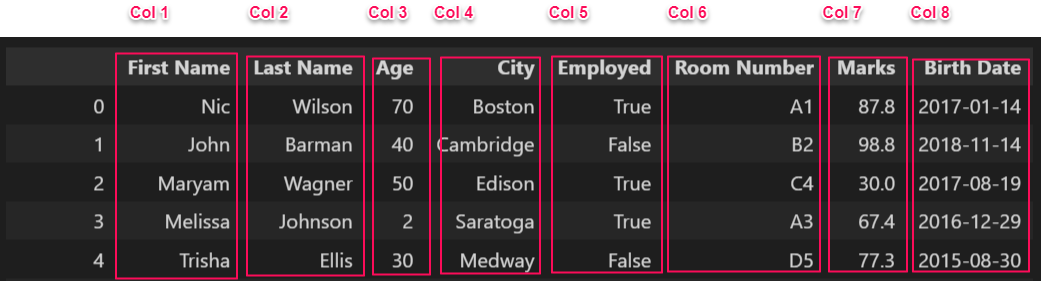

Parquet files store data column-wise. For example:
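The layout difference is easy to show with a toy sketch in plain Python. It only illustrates the idea and is not Parquet's actual on-disk format; the records and the `row_wise`/`column_wise` helpers are hypothetical.

```python
# Hypothetical records; this is an illustration, not Parquet's real byte layout.
COLUMNS = ("First Name", "City", "Age", "Employed")
RECORDS = [
    ("Ana", "London", 36.0, True),
    ("Ben", "London", 41.0, False),
    ("Cleo", "Paris", 29.0, True),
]


def row_wise(records):
    """Row-oriented (CSV-like) layout: each record's values sit next to each other."""
    return [value for record in records for value in record]


def column_wise(records, columns):
    """Column-oriented (Parquet-like) layout: each column's values sit next to each other."""
    return {name: [record[i] for record in records] for i, name in enumerate(columns)}


if __name__ == "__main__":
    print(row_wise(RECORDS))
    print(column_wise(RECORDS, COLUMNS))
```

With the column-wise layout, reading only "Age" means touching one contiguous list of floats; with the row-wise layout you have to step over every field of every record to collect the same values.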

Columnar data storage enables efficient compression of each column because all values in a column share a single data type and often repeat, which suits encodings such as dictionary and run-length encoding. In the example above, the "First Name", "Last Name", "City" & "Room Number" columns hold textual data stored as type String. The "Age" & "Marks" columns hold numeric data stored as type Float. The "Employed" column holds boolean data stored as type Bool, and the last column, "Birth Date", holds datetime data stored as type Datetime.
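A toy sketch in plain Python shows why a homogeneous column compresses better than a mixed-type row. Parquet does use dictionary encoding and a run-length/bit-packing hybrid among its encodings, but the two helpers below are simplified illustrations, not Parquet's implementation, and the sample data is made up.

```python
from itertools import groupby


def run_length_encode(values: list) -> list:
    """Collapse runs of identical adjacent values into (value, run_length) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


def dictionary_encode(values: list) -> tuple:
    """Replace each value with an index into a list of its distinct values."""
    dictionary, indices, position = [], [], {}
    for value in values:
        if value not in position:
            position[value] = len(dictionary)
            dictionary.append(value)
        indices.append(position[value])
    return dictionary, indices


# Hypothetical "City" column: one data type, lots of repetition.
city_column = ["London"] * 4 + ["Paris"] * 3 + ["London"] * 2
# Hypothetical single row: mixed types, so neighbouring values rarely repeat.
one_row = ["Ana", "Smith", "London", "R101", 34.0, 72.5, True]

if __name__ == "__main__":
    print(run_length_encode(city_column))  # [('London', 4), ('Paris', 3), ('London', 2)]
    print(dictionary_encode(city_column))  # (['London', 'Paris'], [0, 0, 0, 0, 1, 1, 1, 0, 0])
    print(len(run_length_encode(one_row)))  # 7: no runs to collapse
```

Nine column values collapse to three runs (or two dictionary entries plus small integer indices), whereas the seven values of the mixed-type row gain nothing.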

Another advantage of columnar storage is that, similar to databases, it enables efficient querying and filtering, for example through predicate pushdown. Filters are applied at read time, so data you don't need can be skipped instead of being read from disk into memory, which speeds up reads.

How does the Parquet file format compare with other popular formats such as CSV and JSON?

We did a small experiment as follows:

- Create a DataFrame with 60 million rows and 8 columns.

- Use mixed data types in the DataFrame: text, numeric, boolean & datetime.

- Measure the time taken to save the DataFrame as CSV, JSON & Parquet files on disk.

- Measure the size of each file created on disk.

- Compare the time taken to create each file against its size on disk across the CSV, JSON & Parquet file formats.

Below is a plot of the final results:

Saving the DataFrame to disk took 568 seconds as CSV, 82 seconds as JSON and 42 seconds as Parquet.

On disk, the CSV file was 2.75 GB, the JSON file was 8.1 GB and the Parquet file was 92 MB, i.e. 0.09 GB.
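Put as ratios (taking 1 GB = 1,000 MB, an assumption since the post does not state which convention it uses), the Parquet file was roughly 30 times smaller than the CSV file and 88 times smaller than the JSON file, and it was written about 13.5 times faster than CSV and about twice as fast as JSON:

```latex
\begin{aligned}
\frac{\text{CSV size}}{\text{Parquet size}} &= \frac{2750\ \text{MB}}{92\ \text{MB}} \approx 30, &
\frac{\text{JSON size}}{\text{Parquet size}} &= \frac{8100\ \text{MB}}{92\ \text{MB}} \approx 88, \\
\frac{t_{\text{CSV}}}{t_{\text{Parquet}}} &= \frac{568\ \text{s}}{42\ \text{s}} \approx 13.5, &
\frac{t_{\text{JSON}}}{t_{\text{Parquet}}} &= \frac{82\ \text{s}}{42\ \text{s}} \approx 2.
\end{aligned}
```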

Pro Tip: Parquet can save you real $$$ on cloud storage costs thanks to its efficient per-column compression. Also, consider it as your format of choice for log files.

If you would like to reproduce these results on your own machine, check out this Jupyter notebook in our Git repository.

Should you use Parquet files for Data Science?

The short answer is a resounding YES. Parquet is an open-source, modern file format that was built from the ground up with scalability, efficiency and performance as first-class citizens.

However, there are some instances where you might not want to use Parquet. For example:

- If the file must be human-readable, CSV & JSON are preferable, as Parquet is a binary format.

- If your files or dataset are small, you are unlikely to face size or time constraints. In this case, switching to Parquet may not give you any real benefit.

- Since Parquet is a relatively new file format, it is still not as widely used as CSV or JSON, and many legacy systems and applications cannot read it. So if you are sharing your work with others, weigh the trade-offs of moving to Parquet against sticking with JSON/CSV/Excel.

- Row-oriented formats such as CSV are a better fit when you need to access most or all of the values in each row (record).