October 21, 2023

First, Middle & Last Mile Problems Of Data Science

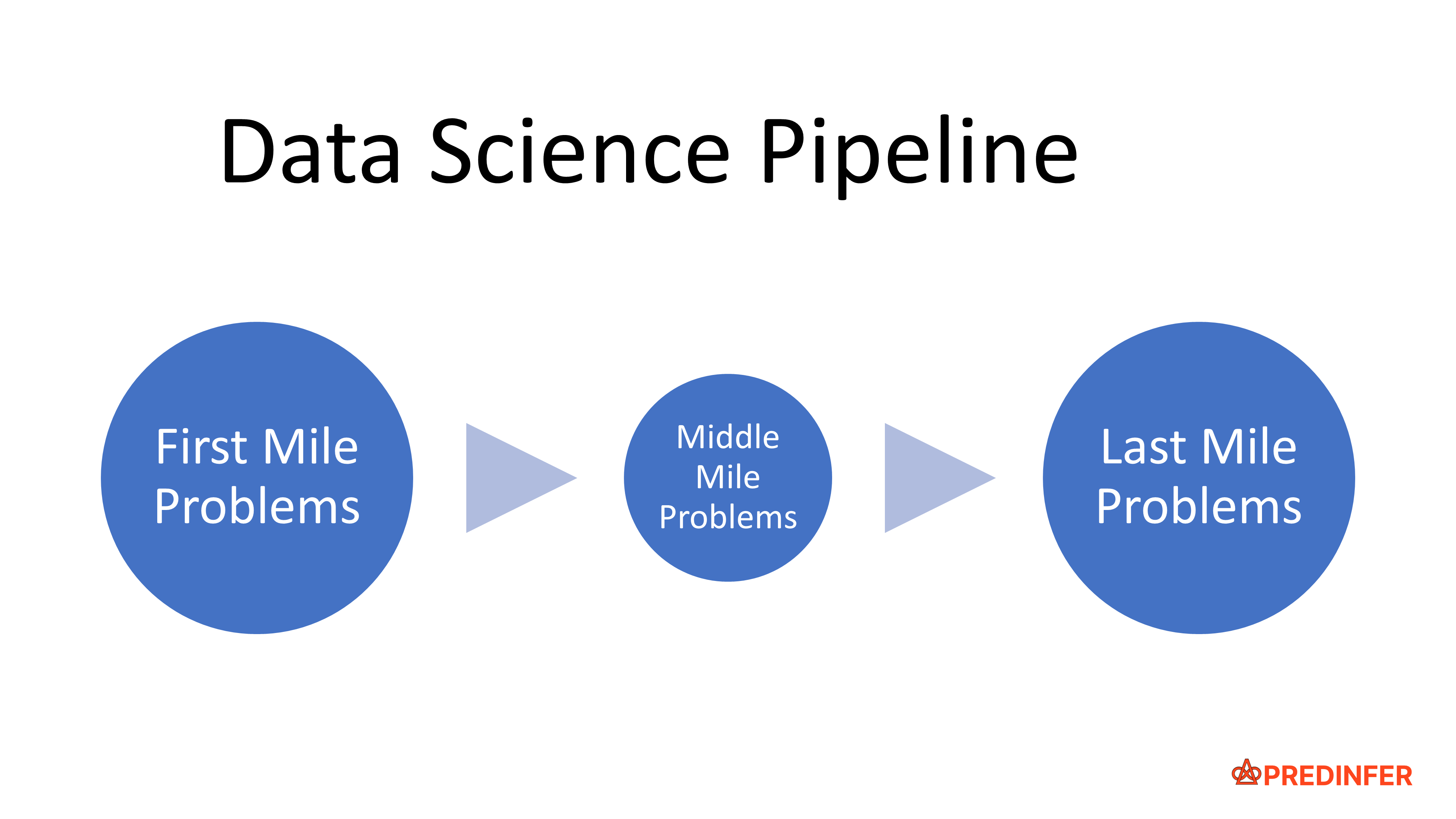

In this post we will explore the "First Mile", "Middle Mile" and "Last Mile" problems of Data Science.

"First Mile and Last Mile problems are like the dark matter of Data Science. They get 20% of the attention but are responsible for 80% of the outcome."

John Tukey, considered by many to be the father of Data Science, famously said the following about the importance of answering the "right question".

"Far better an approximate answer to the right question, which is often vague, than the exact answer to the wrong question, which can always be made precise." - John Tukey

Cognitive psychologists Christopher Chabris and Daniel Simons in their book "The Invisible Gorilla" talk about a cognitive bias known as "inattentional blindness". As part of their experiment they asked volunteers to keep track of how many times some basketball players tossed a basketball. While the volunteers were intently focused on counting, a person in a gorilla suit walked across the court. Surprisingly, many volunteers didn’t even notice the gorilla.

This serves to illustrate the point that human attention is like a spotlight that does well with focused tasks. However, it is only when you turn on the floodlights that you can see the big picture clearly.

First Mile Problems

The "First Mile" problem of Data Science focuses on the following aspects of the Data Science Pipeline:

- Where did the data come from?

- Do you understand the data?

- What is the data about?

- Do you understand the problem?

- Have you applied the scientific method to the problem?

- What is the "right data" for the problem?

- What are the strengths and limitations of the data?

- Did you take a step back and think deeply about the problem you are confronting?

- How do you describe the data generation process?

- Do you recognize that correlation does not imply causation?

- Do you recognize that the map is not the territory? Said another way, a dataset (map) only models the real world (territory), so when the two disagree, the fault lies with the dataset (map), not the real world (territory).
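To make the correlation-versus-causation question above concrete, here is a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the variable names (`temperature`, `ice_cream_sales`, `sunburn_cases`), the coefficients and the noise levels are invented, and the data is simulated rather than real. It shows two quantities that never influence each other yet correlate strongly because a third factor drives both.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical confounder: daily temperature drives both quantities below.
temperature = [rng.gauss(25, 5) for _ in range(2000)]
ice_cream_sales = [2.0 * t + rng.gauss(0, 3) for t in temperature]
sunburn_cases = [1.5 * t + rng.gauss(0, 3) for t in temperature]

# Neither outcome influences the other, yet they correlate strongly.
raw_corr = pearson(ice_cream_sales, sunburn_cases)

# Remove the confounder's (here, known) contribution and correlate what is left.
sales_resid = [s - 2.0 * t for s, t in zip(ice_cream_sales, temperature)]
burn_resid = [b - 1.5 * t for b, t in zip(sunburn_cases, temperature)]
resid_corr = pearson(sales_resid, burn_resid)

print(f"raw correlation: {raw_corr:.2f}")
print(f"after removing temperature: {resid_corr:.2f}")
```

In this simulation we know the confounder and its exact effect because we wrote the data generator ourselves. With real data that knowledge has to come from understanding where the data came from, which is exactly the judgement the questions above are asking for.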

First Mile Problems cannot easily be automated as they require human judgement that is based on experiential knowledge.

Middle Mile Problems

The "Middle Mile" problem of Data Science focuses on the following aspects of the Data Science Pipeline:

- Explicit "textbook" knowledge that is well defined and easy to teach, such as:

  - Algorithm selection

  - Feature selection

  - Building the model and explaining what the model does

  - Performance metrics, i.e. explaining why the model performs the way it does

- Pattern recognition to understand the specific class of the problem. For example:

  - Supervised Learning

  - Unsupervised Learning

  - Semi-Supervised and Self-Supervised Learning

  - Reinforcement Learning

  - Time Series Analysis

  - Natural Language Processing (NLP)

  - Computer Vision

  - Anomaly Detection

  - Association Rule Learning

- Applying proven best practices. For example:

  - Splitting the dataset into training, validation and test sets

  - Applying cross-validation and full cross-validation to assess the generalization performance of machine learning models
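The two best practices above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the helper names `train_val_test_split` and `k_fold_indices`, the 70/15/15 proportions and the choice of five folds are all hypothetical, and in practice you would more likely reach for a library such as scikit-learn.

```python
import random

def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle a copy of rows and cut it into train, validation and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def k_fold_indices(n_rows, k=5):
    """Yield (train_idx, val_idx) pairs; each row is held out exactly once."""
    indices = list(range(n_rows))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

rows = list(range(100))  # stand-in for 100 labelled examples
train, val, test = train_val_test_split(rows)
print(len(train), len(val), len(test))

# Cross-validate on the training portion only; the test set stays untouched.
folds = list(k_fold_indices(len(train), k=5))
for train_idx, val_idx in folds:
    print(len(train_idx), len(val_idx))
```

The design choice worth noticing is that the test set is carved off first and never enters the cross-validation loop, so it remains an honest estimate of how the model behaves on data it has not seen.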

Not surprisingly, the "Middle Mile" is where the majority of Data Science practitioners, courses and bootcamps focus their time.

However, since these problems are well defined and easy to teach, they are the part of the pipeline that is most susceptible to automation. To see an example of this kind of automation, check out the amazing "Advanced Data Analysis" feature of ChatGPT Plus.

Last Mile Problems

The "Last Mile" problem is all about bridging the gap between the model output and the outcome your clients/stakeholders want to achieve.

It focuses on the following aspects of the Data Science Pipeline:

- How do you integrate with existing systems?

- How do you tailor the results for human decision makers?

- What is the target variable?

- Are you predicting the right target variable?

- Was sampling done correctly? Was there oversampling? Were any classes neglected?

- Did you account for biases and hidden assumptions in the results?

- Is the result actionable?

- What is the plan of action to operationalize the results?

- How will the model work against real unseen data?

- Do you need to go back and iterate on "First Mile" or "Middle Mile" problems?

- Did you take a step back and think deeply about the results? Do they make sense?

- Are you solving the right problem? For example, are you focusing on the bigger picture:

  - Did the patient get healthier?

  - Is the diagnosis more accurate?

  - Did the workforce become more effective and more diverse?
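The question "Were any classes neglected?" from the list above is one of the few Last Mile checks that is easy to show in code. The sketch below is a toy example built on assumptions: the labels (`healthy`, `sick`), the 95/5 class split and the helper name `per_class_recall` are all made up for illustration. It shows how a headline accuracy figure can look excellent while one class is ignored entirely.

```python
from collections import Counter

def per_class_recall(y_true, y_pred):
    """Fraction of each true class that the predictions got right."""
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}

# Hypothetical screening data: 95 healthy patients, 5 sick ones.
y_true = ["healthy"] * 95 + ["sick"] * 5
# A degenerate model that always predicts the majority class.
y_pred = ["healthy"] * 100

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
recall = per_class_recall(y_true, y_pred)

print(f"accuracy: {accuracy:.2f}")   # accuracy: 0.95
print(f"recall by class: {recall}")  # recall by class: {'healthy': 1.0, 'sick': 0.0}
```

A model that is 95% accurate and finds none of the sick patients does nothing for the outcome that matters here. Whether that trade-off is acceptable is not something the metric can tell you; it depends on what the stakeholders are actually trying to achieve.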

Similar to "First Mile" problems, "Last Mile" problems cannot easily be automated, as they require human judgement that is based on experiential knowledge.

"You're not paid to create the most accurate output. You are paid to facilitate a larger process that results in a better outcome."

References and further reading: