September 04, 2023

5 Key Ideas About Large Language Models (LLMs)

Large Language Models (LLMs) are a megatrend, and they are here to stay. In this post we explore five key ideas behind them.

1. Biomimicry

Biomimicry is the practice of imitating life: looking to nature for inspiration and direction when solving complex human problems. Why does this work? Nature has been evolving ever since life first appeared on Earth some 3.8 billion years ago. Can there be a better-proven source of inspiration?

One of the most complex systems nature has created is the human brain, which contains an average of 86 billion neurons. Naturally, people have been studying the mind for a very long time, going as far back as Gautama Buddha some 2,500 years ago, who is sometimes described as the creator of the "science of the mind".

So what does this have to do with LLMs? At their core, LLMs are built on neural networks, an idea that dates back to the 1940s. A neural network is a form of biomimicry: a machine learning model that processes data in a way loosely inspired by the human brain.

Just as the human brain is a complex network of some 86 billion interconnected neurons that process information in layers, a neural network processes data through a series of layers. GPT-3, the model from which ChatGPT's underlying models descend, has 175 billion parameters (weights).

But here is the most interesting part: just as we do not fully understand how our brain works, we do not fully understand how an LLM like ChatGPT works. We can clearly see that ChatGPT performs complex natural language tasks, but we are still figuring out how and why it works so well.
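To make "layers" and "parameters" a little more concrete, here is a minimal sketch of a tiny feed-forward neural network in plain Python. It is only an illustration under stated assumptions: the layer sizes, the tanh activation and the helper names (`make_layer`, `forward`, `count_parameters`) are made up for this example, and real LLMs use a far larger and more elaborate architecture.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Create one fully connected layer: a weight matrix plus a bias vector."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(layers, inputs):
    """Pass the inputs through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        # Each output unit is a weighted sum of the previous layer, squashed by tanh.
        activations = [
            math.tanh(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


def count_parameters(layers):
    """Parameters are simply all the weights and biases in the network."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


rng = random.Random(0)   # fixed seed so the run is repeatable
sizes = [4, 8, 8, 2]     # hypothetical sizes: 4 inputs, two hidden layers, 2 outputs
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

output = forward(layers, [0.5, -0.2, 0.1, 0.9])
print(count_parameters(layers))  # 130 = (4*8 + 8) + (8*8 + 8) + (8*2 + 2)
```

This toy network has 130 parameters. A model with 175 billion parameters applies the same basic idea, weights organized in layers, at a vastly larger scale.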

Can ChatGPT ever "truly understand us" or will it just continue to "produce stuff" that it knows we humans find useful?

2. Open Source vs Closed Source

It is well established by now that a thriving ecosystem needs open source and closed source options to coexist. Thankfully, the LLM space is seeing both open source and closed source models developed side by side.

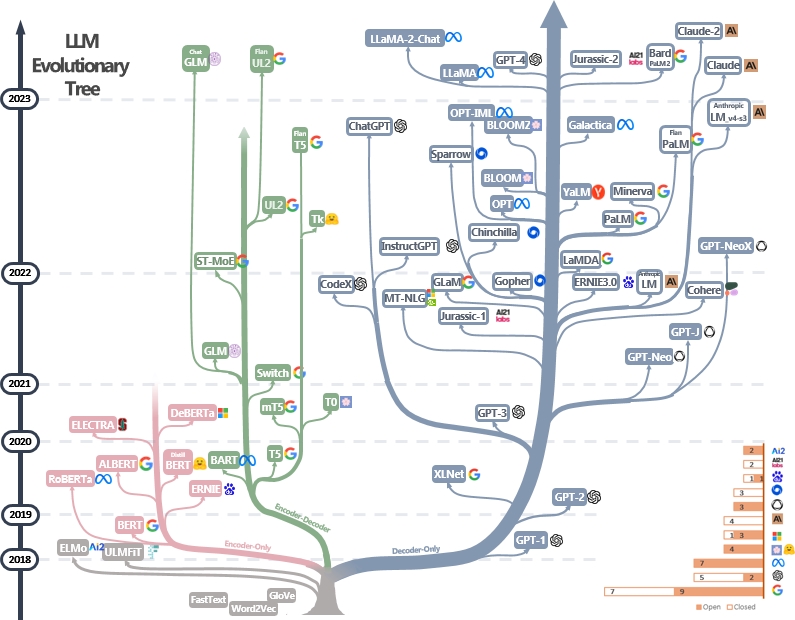

In the LLMsPracticalGuide Github repository we can find the following evolutionary tree of LLMs over the last five years:

Note: one of the most popular open source LLMs is the 65 billion parameter LLaMA model by Meta.

For a full list of popular LLMs along with their license types, refer to the list of usage and restrictions in LLMsPracticalGuide and to the GPT4all.io resources.

3. Hallucinations

Have you encountered any of the following situations?

- A person who has just started learning about a topic believes they know more than someone who has been studying it for years.

- A person who only recently started learning to drive a car believes they are an excellent driver.

- An amateur sports player overestimates their performance in an upcoming tournament.

These are all classic examples of the Dunning-Kruger effect, a cognitive bias in which people with limited competence in a particular domain overestimate their abilities.

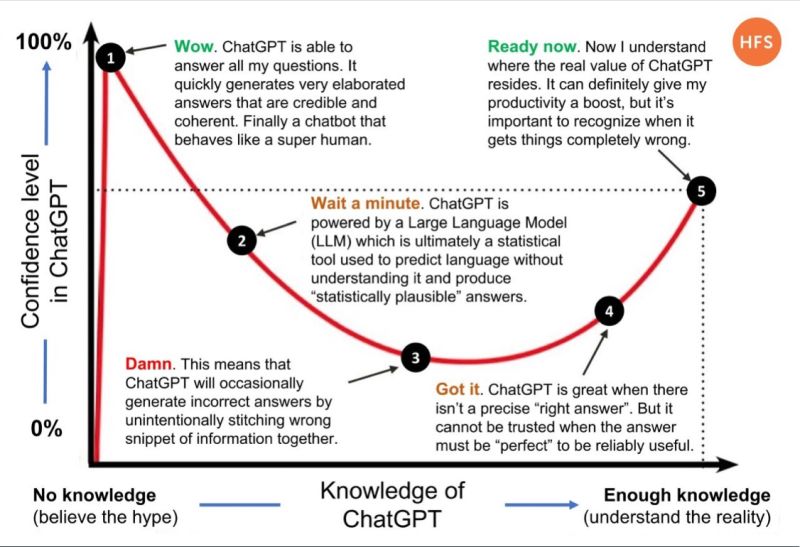

In this post, HFS research looked at ChatGPT through the lens of the Dunning-Kruger effect and came up with the following excellent illustration:

As illustrated beautifully in this image, it is important to recognize the limits of ChatGPT and acknowledge the fact that like any tool ChatGPT has limits.

LLMs are known to "hallucinate" i.e. produce output that is false and made-up. This is no different than the problem of "fake news" that we've been increasingly dealing with as a society.

As with any technology, creators, maintainers and most importantly users of LLMs must exercise caution and recognize that the current rate of hallucinations with chatbots is on average 20-25%.

4. Scientific Breakthrough

At the beginning of this post, we called LLMs a megatrend. Megatrends are large, long-term shifts that can have a profound impact on the world. They are driven by a combination of technological, economic, social, and political factors, and can last for decades or even centuries.

LLMs are a megatrend and they are here to stay. Don't dismiss the V1 state of a technology; think of how good it can get by V10. Think of how far the iPhone has come over the past 16 years, or of how much GPT-4 improved on GPT-1.

We are still in the early stages of figuring out all the possibilities and pitfalls of LLMs. Humans tend to fear or dismiss what they don't understand. It is in our best interest to pay attention to this technology now that the cat is out of the bag and there is no going back.

5. Generative AI

We have now entered the "Era of Generative AI". The whole draw of LLMs has been that they can generate new content, on almost any topic, that is very similar to what humans create.

Generative AI models work by generating new data samples that resemble the data they were trained on. They do this by learning the underlying distribution of the training data and then sampling from it, which yields new, randomized outputs.
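The idea of "capture the distribution, then sample from it" can be sketched with a toy character-level model. This is a deliberately simple stand-in, not how LLMs are actually built: the training sentence, the seed and the helper names (`train_bigram_model`, `generate`) are assumptions made for this illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for current_char, next_char in zip(text, text[1:]):
        counts[current_char][next_char] += 1
    return counts


def generate(model, start, length, rng):
    """Sample new text one character at a time from the learned counts."""
    out = [start]
    for _ in range(length - 1):
        followers = model.get(out[-1])
        if not followers:
            break  # this character was never followed by anything in training
        chars = list(followers.keys())
        weights = list(followers.values())
        # Characters seen more often after the current one are more likely to be picked.
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


training_text = "the cat sat on the mat and the cat ate the rat"  # made-up toy data
model = train_bigram_model(training_text)
sample = generate(model, "t", 20, random.Random(42))
print(sample)
```

The output is new text that was never written by anyone, yet every pair of adjacent characters in it also occurs in the training sentence. An LLM predicts the next token with a neural network rather than a table of counts, but the generate-by-sampling loop follows the same principle.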

Some exciting applications of Generative AI include:

- Text generation - e.g. articles, poetry, Q&A, essays and summaries.

- Image generation - e.g. the DALL-E model by OpenAI, which generates synthetic images from natural language descriptions.

- Music generation - e.g. creating audio in the voices of popular singers from textual input (FakeYou, ElevenLabs).

- Video generation - e.g. Meta's Make-A-Video system, which generates videos from text.