September 27, 2023


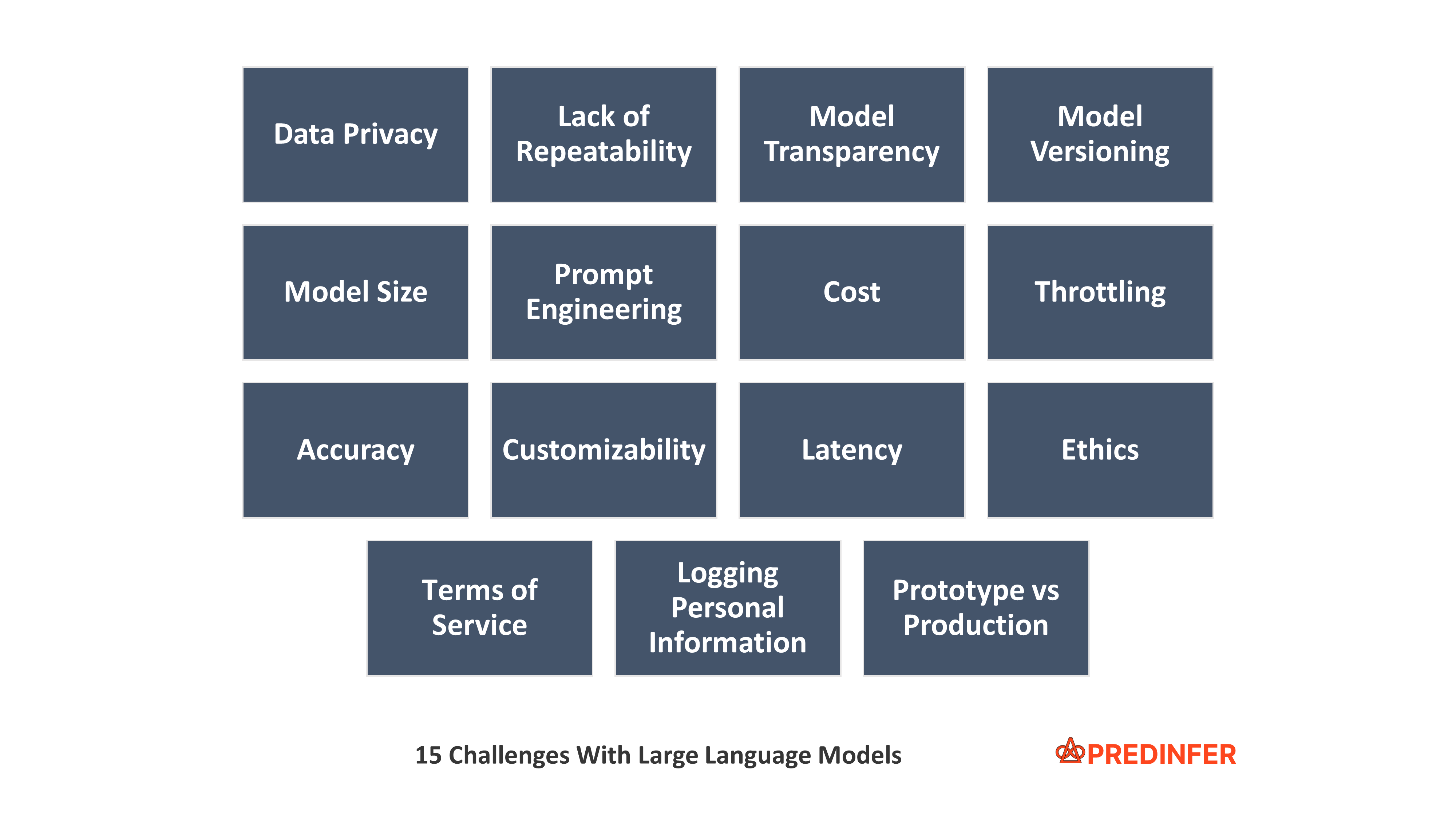

15 Challenges With Large Language Models (LLMs)

Large Language Models (LLMs) are a Mega Trend, and they are here to stay. While LLMs have proven to be extremely capable and powerful, they also present a number of challenges that need to be addressed. As the line from Spider-Man goes, "With great power comes great responsibility".

1. Data Privacy

Naturally, one of the biggest concerns for users of an LLM like ChatGPT is data privacy. Common questions users have include:

- Is submitted data used to train and improve the model?

- How long is user data stored on servers?

- How are the companies behind these chatbots complying with GDPR / CCPA / HIPAA laws?

- How much "Personally Identifiable Information (PII)" is collected and stored?

- What options do users have about how their information is stored and processed?

- How can a user trust that the organizations running these chatbots will "Do the right thing"?

Some of the actions companies are taking to address these questions include:

- Giving users the option to turn off chat history, which also prevents user input from being used to train the models.

- Providing options to export your data, permanently delete your account, and opt out of all model training.

- Undergoing third-party compliance audits, for example for SOC 2 Type 2 certification.

- Making a promise that user content will not be shared with third parties for marketing purposes.

- Educating users about how the technology works and clarifying the broader vision i.e. "We want our AI models to learn about the world—not private individuals."

These are just some of the user questions around "Data Privacy". Since this is a new and evolving field, the terms are subject to change. See the OpenAI Security Portal and Gemini privacy pages for more details.

2. Lack of Repeatability

A defining characteristic of Large Language Models is that their output is non-deterministic: the same prompt can produce a different response each time.

"Our models generate new words each time they are asked a question. They don’t store information in a database for recalling later or “copy and paste” training information when responding to questions." - OpenAI

In fact, LLMs expose a "temperature" parameter that controls the "creativity" or "randomness" of the generated text.
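Conceptually, temperature divides the model's raw scores (logits) for each candidate token before they are turned into probabilities with a softmax. The sketch below assumes that standard formulation; the logits are made-up values, and hosted chatbots apply this step internally rather than exposing a function like `softmax_with_temperature`.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw token scores into next-token probabilities.

    Lower temperatures sharpen the distribution toward the top-scoring token;
    higher temperatures flatten it, so unlikely tokens get picked more often.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature nearly all probability lands on the first token; at a high temperature the three options become much closer.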

By contrast, a defining characteristic of software, and of science more generally, is that results are repeatable, reproducible, and replicable.

While the creativity and randomness of LLMs are what have made them so popular, they also present new challenges. For example, how do you write a test when the output differs each time for the same input?

One way to control randomness is to adjust the temperature parameter to suit your needs: lower values make output more focused and predictable, higher values make it more varied. For example, "Bing Chat" provides the options More Creative, More Balanced, or More Precise.

Another option is to explicitly pass instructions to the chatbot. For example:

"Avoid repeating lines or phrases. All messages must be 2-4 paragraphs long."
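Because exact-match assertions break when output varies from run to run, one option is to test properties of a response rather than its exact wording. The sketch below checks the two rules from the example instruction above; `check_response` is a hypothetical helper, and treating blank lines as paragraph separators is an assumption.

```python
def check_response(text: str, min_paragraphs: int = 2, max_paragraphs: int = 4) -> list[str]:
    """Return a list of rule violations for a generated response (empty if it passes)."""
    problems = []
    # Assumption: paragraphs are separated by blank lines.
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    if not min_paragraphs <= len(paragraphs) <= max_paragraphs:
        problems.append(
            f"expected {min_paragraphs}-{max_paragraphs} paragraphs, got {len(paragraphs)}"
        )
    # Flag any non-empty line that appears more than once.
    seen = set()
    for line in (raw.strip() for raw in text.splitlines()):
        if line and line in seen:
            problems.append(f"repeated line: {line!r}")
        seen.add(line)
    return problems


sample = "First paragraph of the answer.\n\nSecond paragraph of the answer."
print(check_response(sample))
```

A test suite can then assert that `check_response(output)` is empty for every generated output, which stays stable even when the wording changes between runs.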

3. Model Transparency

Given the cost and complexity of running an LLM at scale, it comes as no surprise that big businesses dominate the LLM space, pumping billions of dollars into training and development. This also means that the internal workings of popular chatbots like ChatGPT and Google's Gemini are largely a black box to the end user.

However, it is well established that a thriving ecosystem needs both open source and closed source options to coexist. Thankfully, the LLM space is seeing the simultaneous development of both open source and closed source LLMs.

One of the most popular open source LLMs is Meta's LLaMA, whose largest version has 65 billion parameters. For a full list of popular LLMs along with their license types, refer to the list of usage and restrictions from LLMsPracticalGuide and the GPT4all.io resources.

4. Model Versioning

GPT-3 has 175 billion parameters. GPT-4 is reported to have about 1.76 trillion parameters, although OpenAI has not officially disclosed the figure. As of this writing, both models have a knowledge cut-off date of September 2021. The goal is to keep increasing the capabilities of these models while also training them on newer information, which would reduce the need to use a traditional search engine alongside ChatGPT.

OpenAI's models are continuously updated. In software, this presents a major problem, since frequent changes create instability for users who rely on consistent results. To counter this, OpenAI has released several dated versions of the same model and provided a transition plan. For example:

- gpt-3.5-turbo-0301

- gpt-4-0314

- gpt-4-32k-0613

- gpt-4-32k-0314 (Legacy)

The naming format can be described as Model Name + optional context-window size (e.g. 32k tokens) + snapshot date in MMDD format.
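The convention can be captured with a small regular expression. This is a sketch that only assumes names follow the pattern of the examples listed above (annotations like "(Legacy)" are not part of the identifier); it is not an official OpenAI parser, and other model names may not fit it.

```python
import re
from typing import NamedTuple, Optional


class ModelId(NamedTuple):
    family: str
    context: Optional[str]   # e.g. "32k"; None when the name has no size suffix
    snapshot: Optional[str]  # MMDD date of the frozen snapshot, if any


# Assumed pattern: <family>[-<N>k][-<MMDD>], matching the examples above.
_PATTERN = re.compile(r"^(?P<family>.+?)(?:-(?P<context>\d+k))?(?:-(?P<snapshot>\d{4}))?$")


def parse_model_id(name: str) -> ModelId:
    """Split a model name into family, context-window suffix, and snapshot date."""
    m = _PATTERN.match(name)
    if m is None:
        raise ValueError(f"unrecognized model name: {name}")
    return ModelId(m["family"], m["context"], m["snapshot"])


for name in ("gpt-3.5-turbo-0301", "gpt-4-0314", "gpt-4-32k-0613"):
    print(parse_model_id(name))
```

Pinning a full snapshot name such as `gpt-4-0314` in configuration, rather than a bare family name, is what gives users the frozen behavior described below.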

The primary issues being addressed with multiple model versions are:

- Providing frozen snapshots that users can rely on

- Providing a discontinuation date

- Providing an update path for replacement models

- Managing change with transparent communication

Given the dynamic landscape, model versioning and consistency of results will continue to be challenging issues for LLMs.

5. Model Size

A 65 billion parameter model is approximately 120GB in size. GPT-3, with 175 billion parameters, is about 800GB in size. As parameter counts grow (GPT-4 reportedly has about 1.76 trillion parameters), model sizes will continue to grow.
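A common rule of thumb for the weights alone is parameters × bits per parameter ÷ 8 bytes. The sketch assumes every weight is stored at the same precision and ignores everything else (activations, runtime buffers, file metadata). At 16 bits per weight, a 65B model works out to roughly 121 GiB, in line with the ~120GB figure above.

```python
def weights_size_gib(num_params: float, bits_per_param: float) -> float:
    """Approximate size of the model weights alone, in GiB (2**30 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 2**30


# A 65B-parameter model at common precisions (weights only).
for bits in (32, 16, 8):
    print(f"65B @ {bits}-bit: ~{weights_size_gib(65e9, bits):.0f} GiB")
```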

Can you run an LLM locally on your laptop or phone? How much RAM & Disk Space do you need? Given the privacy and latency concerns, this is an area of active research for LLMs.

Libraries such as llama.cpp use a technique known as quantization, which stores model weights at lower numerical precision, to reduce the size of LLMs. This makes it possible to run LLMs more efficiently on smaller devices. For example, with 4-bit quantization, a 65 billion parameter model shrinks from 120 GB to 38.5 GB. This makes it possible to run an LLM locally on a personal laptop.
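The core idea of quantization can be illustrated with a simple symmetric 4-bit scheme: scale a block of weights so they fit into 16 integer levels, then store the small integers plus one shared scale factor. This is a simplified sketch, not llama.cpp's actual format; real formats store per-block scales and other metadata, which is one reason real 4-bit files (such as the 38.5 GB figure above) are larger than an idealized 4 bits per weight.

```python
def quantize_4bit(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric 4-bit quantization of one block of weights.

    Each weight maps to an integer in [-8, 7] using a single shared scale,
    so the block costs 4 bits per weight plus one float for the scale.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize_4bit(q: list[int], scale: float) -> list[float]:
    """Recover approximate weights from the stored integers and scale."""
    return [v * scale for v in q]


block = [0.12, -0.53, 0.97, -0.08, 0.44, -0.91, 0.0, 0.35]  # hypothetical weights
q, scale = quantize_4bit(block)
restored = dequantize_4bit(q, scale)
max_error = max(abs(a - b) for a, b in zip(block, restored))
print(q, round(scale, 4), round(max_error, 4))
```

The trade-off is visible in `max_error`: each weight is off by at most half a quantization step, which is usually an acceptable loss of accuracy for a 4x reduction versus 16-bit storage.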

6. Prompt Engineering

One of the most important aspects of using generative models such as ChatGPT and Gemini is "Prompt Design", also known as "Prompt Engineering". So what is prompt engineering, and why does it matter?

“The quality of your life is a direct reflection of the quality of the questions you are asking yourself” - Anthony Robbins

The quality of the responses an LLM like ChatGPT generates depends heavily on the quality of the questions, i.e. the input prompts, provided by the user. Prompt engineering is a new and emerging field that aims to improve the performance of AI models on specific tasks by crafting precise and informative prompts.

The best way to learn about this is to try out various prompts yourself. The awesome-chatgpt-prompts GitHub repository has an excellent collection of prompts to experiment with. For example, you can ask ChatGPT to act as various personas:

- Act as a Linux Terminal

- Act as position Interviewer

- Act as a JavaScript Console

- Act as an Excel Sheet

- Act as a Plagiarism Checker

- Act as a Debater

- Act as a Relationship Coach

- Act as a Philosopher

- Act as a DIY Expert

- Act as a Python interpreter

- Act as a SQL terminal

- Act as a Statistician

- ...
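Most persona prompts share a structure: a role, some constraints on the output, then the user's actual input. The sketch below assumes a chat-style API that accepts a list of role/content messages (the shape used by OpenAI's Chat Completions API); `persona_messages` is a hypothetical helper, and the resulting list would be passed to whichever client library you use.

```python
def persona_messages(persona: str, instructions: str, user_input: str) -> list[dict[str, str]]:
    """Build a chat-style message list that asks the model to adopt a persona."""
    system_prompt = f"Act as a {persona}. {instructions}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]


messages = persona_messages(
    persona="SQL terminal",
    instructions="Reply only with the query result, with no explanations.",
    user_input="SELECT name FROM products ORDER BY id DESC LIMIT 5;",
)
for m in messages:
    print(m["role"], "->", m["content"])
```

Keeping the persona and constraints in the system message separates them from the user's input, so the same persona can be reused across many requests.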

7. Cost

As of April 2023, researchers estimated that ChatGPT costs about $700,000 per day to operate. The cost per query for ChatGPT is estimated to be about 0.36 cents.
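Taken together, the two estimates imply a daily query volume. This is only a consistency check: it assumes both figures are accurate and that the whole daily cost is attributable to queries.

```python
daily_cost_usd = 700_000     # estimated daily operating cost (from the estimate above)
cost_per_query_usd = 0.0036  # 0.36 cents per query, expressed in dollars

# Implied volume = total daily cost / cost of a single query.
implied_queries_per_day = daily_cost_usd / cost_per_query_usd
print(f"~{implied_queries_per_day / 1e6:.0f} million queries per day")
```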

ChatGPT reportedly became the fastest-growing consumer application at the time, reaching an estimated 100 million users just two months after its November 2022 launch.

This explosive growth, combined with high operating costs, pushed OpenAI to commercialize ChatGPT quickly by offering "ChatGPT Plus" for $20/month.

But how much does it cost to train a Large Language Model? Let's look at how LLaMA was trained, using some back-of-the-envelope calculations:

- 2048 A100 GPUs to train the model

- Each GPU costs ~$15k

- ~23 days to train LLaMA

- This means capital costs alone are about $30 million (2,048 × $15k ≈ $30.7 million)

- Electricity costs were estimated at ~$53,000
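The bullet-point arithmetic can be reproduced directly. The sketch uses only the figures listed above and also derives the total GPU-hours of the training run, a convenient unit when comparing against hourly GPU rental prices.

```python
num_gpus = 2048
gpu_price_usd = 15_000
training_days = 23

capital_cost_usd = num_gpus * gpu_price_usd  # 30,720,000, i.e. about $30 million
gpu_hours = num_gpus * training_days * 24    # total GPU time consumed by the run

print(f"Capital cost: ${capital_cost_usd:,}")
print(f"GPU-hours:    {gpu_hours:,}")
```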

Given these prohibitively high training and operational costs, it is clear why only a handful of large corporations have been able to operate LLMs at scale.

8. Throttling

The problem of throttling can be explained with the "Tragedy of the Commons" analogy. It illustrates that, in the absence of private property rights or strict government regulation, shared resources (i.e., the commons) are ultimately depleted because individuals tend to act selfishly, rushing to harvest as many resources as they can from the commons.

API throttling is a common practice to prevent abuse and ensure fair usage. If a client sends too many requests within a short period of time, the server refuses further requests (typically with an HTTP 429 "Too Many Requests" response) until the rate-limit window resets. OpenAI documents its API rate limits publicly.
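On the server side, rate limiting is often implemented with a token-bucket algorithm. This is a generic sketch of that technique, not OpenAI's implementation; the capacity and refill rate are hypothetical, and the injectable `clock` exists only to make the example deterministic.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` requests per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a server would typically respond with HTTP 429 here


fake_now = [0.0]
bucket = TokenBucket(capacity=3, rate=1.0, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
fake_now[0] += 1.0                          # one second later, one token has refilled
print(bucket.allow())                       # True
```

The bucket tolerates short bursts while enforcing an average rate, which matches the fair-usage goal described above.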

While this is a well-understood problem with many established mitigations, such as exponential backoff and per-user usage limits, it presents unique challenges for LLMs due to their scale and high cost per query.
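On the client side, exponential backoff with jitter is the usual mitigation: wait a little after the first rejection, then progressively longer, with randomness so many clients do not retry in lockstep. A minimal sketch; `RateLimitError` is a hypothetical placeholder for whatever exception your HTTP client or SDK raises on a 429 response, and the delay parameters are illustrative.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(), retrying on RateLimitError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # The delay ceiling doubles each attempt; full jitter waits a random time below it.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))


calls = {"n": 0}


def flaky():
    """Simulated endpoint that is rate-limited twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise RateLimitError()
    return "ok"


print(call_with_backoff(flaky, sleep=lambda s: None))  # skip real waiting in the demo
```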

9. Accuracy

Google says the following about Gemini:

"Gemini is an experimental technology and may sometimes give inaccurate or inappropriate information that doesn’t represent Google’s views."

"Don’t rely on Gemini’s responses as medical, legal, financial, or other professional advice."

"Don’t include confidential or sensitive information in your Gemini conversations."

Meta says the following about LLaMA:

"Model may in some instances produce inaccurate, biased or other objectionable responses to user prompts."

LLMs are known to "hallucinate", i.e. produce output that is false or made up. Some estimates put the hallucination rate of chatbots at around 20-25% on average, though reported rates vary widely by model and task.

While research is underway to reduce hallucinations and improve accuracy, it is up to users and organizations to use LLMs responsibly.

10. Customizability

LLMs are considered "foundation" and "general purpose" models because they are trained on large datasets of unlabeled data. Increasingly, the interesting applications come from fine-tuning these models for specific tasks using a machine learning technique known as transfer learning.
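In practice, much of the work of fine-tuning is preparing task-specific examples. The sketch below writes examples as JSON Lines in the chat "messages" layout that OpenAI documents for fine-tuning its chat models; the examples, the system prompt, and the `to_chat_record` helper are made up for illustration, and other providers expect different formats.

```python
import json

# Hypothetical task-specific examples: (user question, desired answer).
examples = [
    ("What is your return window?", "You can return items within 30 days of delivery."),
    ("Do you ship internationally?", "Yes, we ship to most countries."),
]


def to_chat_record(question: str, answer: str, system: str) -> dict:
    """One training example: a short conversation ending in the desired reply."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


system_prompt = "You are a concise customer-support assistant."
# JSON Lines: one JSON object per line.
jsonl = "\n".join(json.dumps(to_chat_record(q, a, system_prompt)) for q, a in examples)
print(jsonl)
```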

OpenAI launched GPTs to let users create custom versions of ChatGPT without any coding knowledge. Anyone can customize ChatGPT for a specific purpose and share the result publicly on the GPT Store. This will also give creators the opportunity to earn money based on the number of people using their GPT.

Consumers and organizations are also eager to apply the natural language capabilities of LLMs to their personal data and custom applications. There is a growing list of examples of these applications:

- Dropbox Dash

- Evernote AI-Powered Search

- Hugging Face models

- ChatGPT Enterprise

- ChatGPT plugins

- Bard plugins

- Duolingo Max

- GitHub Next projects such as Copilot

- ...

11. Latency

The fundamental mechanism behind an LLM is that it generates text one token (a word or part of a word) at a time. Each next token is chosen from a probability distribution, learned from human-written text, over what would reasonably come next. This means it is not always as simple as picking the token with the highest probability.
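The token-by-token loop can be illustrated with a toy model. This sketch substitutes a hand-written bigram table for a real neural network, so each choice depends only on the previous token, whereas an LLM conditions on the whole context; it contrasts greedy decoding (always the top token) with sampling.

```python
import random
from typing import Optional

# Hypothetical toy "model": probability of each next token given the previous one.
NEXT_TOKEN_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.7, "dog": 0.3},
    "cat": {"sat": 0.8, "<eos>": 0.2},
    "dog": {"ran": 0.8, "<eos>": 0.2},
    "sat": {"<eos>": 1.0},
    "ran": {"<eos>": 1.0},
}


def generate(max_tokens: int = 10, greedy: bool = False, seed: Optional[int] = None) -> list[str]:
    """Produce tokens one at a time, each chosen from the previous token's distribution."""
    rng = random.Random(seed)
    tokens = ["<s>"]
    for _ in range(max_tokens):  # one "model call" per generated token
        dist = NEXT_TOKEN_PROBS[tokens[-1]]
        if greedy:
            nxt = max(dist, key=dist.get)  # always the single most likely token
        else:
            nxt = rng.choices(list(dist), weights=list(dist.values()))[0]  # sample
        if nxt == "<eos>":
            break
        tokens.append(nxt)
    return tokens[1:]  # drop the start marker


print(generate(greedy=True))  # ['the', 'cat', 'sat']
print(generate(seed=7))
```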

The breakthrough with ChatGPT is how coherent its generated text is. This is made possible by a set of tunable weights, or parameters, which number about 175 billion for GPT-3. This highlights the complexity hidden behind running an LLM at scale.

As LLMs are increasingly asked to solve complex problems involving large amounts of data, one obvious challenge is latency and performance. Because each output token requires another pass through the model, LLM latency grows roughly linearly with the number of output tokens.
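A simple mental model for this: end-to-end latency is a fixed overhead (network, processing the prompt) plus a per-token cost for each generated token. The timings below are hypothetical placeholders, not measurements of any particular service, and `estimate_latency_s` is an illustrative helper.

```python
def estimate_latency_s(output_tokens: int, time_to_first_token_s: float, seconds_per_token: float) -> float:
    """Rough latency model: fixed startup cost plus a constant cost per generated token."""
    return time_to_first_token_s + output_tokens * seconds_per_token


# Hypothetical timings: 0.5 s before the first token, then 20 ms per token.
for n in (50, 500, 2000):
    print(n, "tokens ->", round(estimate_latency_s(n, 0.5, 0.02), 2), "s")
```

Under this model, asking for a shorter answer is often the most direct way to cut response time.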

A common symptom of latency issues is a "timeout error", which can be caused by several factors such as a lack of system resources, network interruptions, or cache corruption. Often the only remedy for a user is to ask the LLM to "regenerate its response" or to refresh the page.

12. Ethics

Ethics is a complex branch of philosophy that studies the principles and values that guide the behavior of individuals and groups. It attempts to answer complex questions about right vs wrong & good vs bad.

Meta provided the following ethical considerations statement as part of the Llama 2 model release:

Llama 2 is a new technology that carries risks with use. Testing conducted to date has been in English, and has not covered, nor could it cover all scenarios. For these reasons, as with all LLMs, Llama 2’s potential outputs cannot be predicted in advance, and the model may in some instances produce inaccurate, biased or other objectionable responses to user prompts. Therefore, before deploying any applications of Llama 2, developers should perform safety testing and tuning tailored to their specific applications of the model.

In the news, there have been several lawsuits and legal threats over copyright infringement: authors have sued, tech companies have threatened to sue, and individuals have sued.

Recently, the U.S. Senate held "AI Hearings" that highlighted an increased need for regulation.

Hollywood writers went on strike in part over the role of AI and its impact on jobs.

These are just some examples of the serious ethical considerations & questions from the rapid rise of LLMs.

13. Terms of Service

Terms of Service (ToS) refer to the legal agreements between a service provider and a person/organization who wants to use that service. These agreements specify the rules and guidelines that users must agree to and follow in order to use and access the service.

Generally speaking, ToS often include disclaimers and limitations of liability to protect the service provider from potential legal claims and to clarify the extent of the provider's responsibilities.

ToS documents are typically lengthy legal documents that very few people have the time to read. It is within these documents that various legally binding rules are set forth such as those governing "Intellectual Property Protection", "Setting Grounds for Termination", "Payment Terms", "Dispute Resolution" & "Ensuring Compliance".

With LLMs, ToS terms are constantly changing and evolving.

14. Logging Personal Information

According to OpenAI's privacy policy, examples of personal information collected when using its services include:

- Log Data: IP address, browser type and settings, the date and time of the request etc.

- Usage Data: types of content viewed, features used, action taken, type of device, cookies etc.

- Consumer information is also processed in several other ways, including retaining user data for 30 days to prevent abuse.

Recently, OpenAI has given users the option to turn off chat history, which also prevents user input from being used to train the models.

While logging Personally Identifiable Information (PII) for telemetry purposes is common practice and typically requires users to explicitly opt out, it once again raises privacy concerns for both consumers and businesses.
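For consumers and businesses, one mitigation is to scrub obvious PII before text ever reaches a log file or a third-party API. A minimal sketch using two simple regular expressions; the patterns and the `redact` helper are illustrative only and would miss many forms of PII (names, phone numbers, street addresses).

```python
import re

# Deliberately simple patterns; real PII detection needs far broader coverage.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text: str) -> str:
    """Mask email addresses and IPv4 addresses before text is logged or sent to an API."""
    text = EMAIL.sub("[EMAIL]", text)
    return IPV4.sub("[IP]", text)


print(redact("Contact jane.doe@example.com from 192.168.0.12"))
# Contact [EMAIL] from [IP]
```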

15. Prototype vs Production

LLMs like ChatGPT have certainly accelerated prototype development. However, given all the issues discussed so far, significant challenges remain in deploying production-quality products.

Users typically have high expectations of production-quality products, and many businesses are under tremendous pressure to add LLMs to their existing products using services such as ChatGPT Enterprise.

However, as we've seen in this post there are significant problems that also need to be addressed which include:

- Data privacy

- Performance

- Cost

- Accuracy

- Consistency

- Ethics

- Trust

- ...

As Dr. Andrew Ng recently said:

"While the AI landscape is promising, there will be short-term trends and fads. It's essential to differentiate between genuine long-term value and temporary hype."